IBM has launched a software service that it claims can explain how AI makes decisions “as the decisions are being made”.

The company said its cloud-based tool also “automatically detects bias” in enterprise AI deployments.

Artificial intelligence – in its application of deep learning neural networks, complex algorithms and probabilistic graphical models – is often considered a ‘black box’ that can’t explain how it arrived at a given decision.

Although IBM is pitching the new tool as a “major step in breaking open the black box of AI”, it doesn’t quite explain the decision-making process in human terms. Making, say, neural networks less opaque is a challenge that continues to confound researchers.

Instead, the explanation of how an AI-made decision is reached is drawn from “an analysis of inputs and outputs,” IBM’s cognitive computing and analytics partner Jason Leonard told Computerworld.

The tool automatically logs the data processed by a given model, which enables “complete traceability of all decisions and predictions, and full data and model lineage”. Most of the popular AI build environments are covered, including Watson, TensorFlow, SparkML, AWS SageMaker and AzureML.

“For a given model, it should be possible to identify the team who built it and the datasets they used to train it, as well as the inputs it received in production and the outputs it produced,” Leonard added.

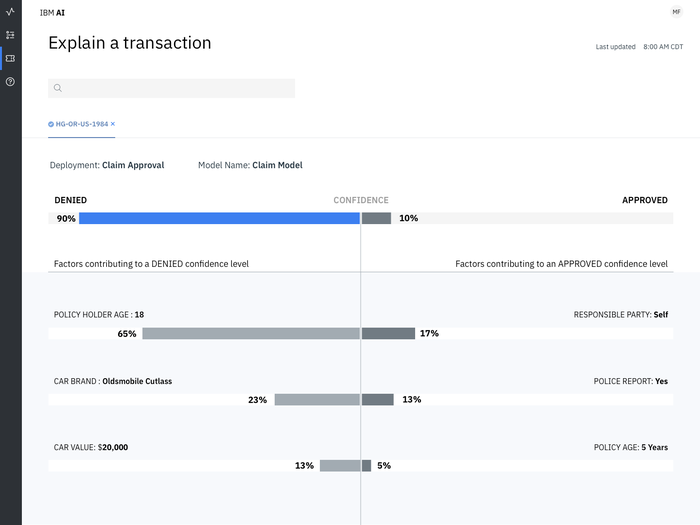

Screenshots provided by IBM show a dashboard entitled ‘explain a transaction’ for an insurance claim approval. It lists factors contributing to a denied loan lined up against those contributing to an approved loan.

“So for a loan your credit history may be a bit dodgy so that may be a negative contributing factor, however your job is super high paid and you’ve been in that job for two years so that’s a positive contributing factor,” Leonard said.

Users get not only the factors that weighted the decision one way or another but also “the confidence in the recommendation, and the factors behind that confidence,” Leonard said.
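IBM has not said how the service computes these contributions beyond “an analysis of inputs and outputs”. As a rough illustration of the idea only, the sketch below assumes a toy linear (logistic) loan-scoring model, where each factor’s contribution is its weight times how far the applicant sits from a reference applicant, and the approval probability stands in for the “confidence in the recommendation”. The feature names, weights, reference values and the `explain_decision` function are all hypothetical; this is not IBM’s model or method.

```python
import math

# Hypothetical toy logistic model for loan approval; NOT IBM's model or method.
# "credit_history" is an assumed standardised score: negative = worse than the reference.
WEIGHTS = {"credit_history": 0.8, "income_k": 0.05, "years_in_job": 0.4}
# Hypothetical reference applicant against which contributions are measured.
REFERENCE = {"credit_history": 0.0, "income_k": 60.0, "years_in_job": 1.0}
REFERENCE_LOG_ODDS = 0.0  # the reference applicant scores exactly 50%


def explain_decision(applicant):
    """Score an applicant and split the score into per-feature contributions.

    Because the model is linear in log-odds, the log-odds equal the reference
    log-odds plus the sum of the contributions, so the breakdown is exact.
    """
    contributions = {
        name: weight * (applicant[name] - REFERENCE[name])
        for name, weight in WEIGHTS.items()
    }
    log_odds = REFERENCE_LOG_ODDS + sum(contributions.values())
    approval_probability = 1.0 / (1.0 + math.exp(-log_odds))
    decision = "approve" if approval_probability >= 0.5 else "deny"
    return decision, approval_probability, contributions


if __name__ == "__main__":
    # A "bit dodgy" credit history, but a highly paid job held for two years.
    applicant = {"credit_history": -1.0, "income_k": 150.0, "years_in_job": 2.0}
    decision, p, contribs = explain_decision(applicant)
    print(decision, round(p, 3))
    for name, value in sorted(contribs.items(), key=lambda kv: kv[1]):
        label = "negative" if value < 0 else "positive"
        print(f"{name:>15}: {value:+.2f} ({label})")
```

For non-linear models such as neural networks, contributions cannot be read off the weights this way, which is why input/output analysis techniques are used instead.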

In a similar way, the tool can also ‘detect bias’ based on ‘fairness attributes’ determined by the user.

“When people describe big data – they often say just grab all the data you can, throw it at the engine and see what pops out the other side. That’s essentially the approach. What are the hidden insights we can get from all this data?” said Leonard.

“So if you’ve got say [an individual’s] gender, the default practice is to use it so that can lead to unexpected bias,” he added.

The tool allows users to pick out features of an AI’s training data – say gender or age – to see how heavily each affects outcomes. Users can also set bias thresholds.

“You can ask ‘how has it been going with this group of people compared with that group’. Gender might be same same, but people over 50 it’s discriminating against them. For a life insurance company that may be a deliberate decision, but it might be going too far and going against policy, so it would raise that to your attention,” Leonard said.

Bias checks can be performed at both build time and runtime, and the tool recommends new data sets for retraining models so that they operate more fairly.
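IBM has not published the exact metric its cloud service uses for this group comparison. One common group-fairness measure, which the open source AI Fairness 360 toolkit also offers under the name “disparate impact”, is the ratio of favourable-outcome rates between a monitored group and everyone else. The sketch below assumes that metric and a user-set threshold of 0.8 (borrowed from the US “four-fifths” rule of thumb); the `Decision` record, `favorable_rate` and `disparate_impact` names are hypothetical and do not reflect IBM’s API.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """Hypothetical logged decision: one applicant's age and the outcome."""
    age: int
    approved: bool


def favorable_rate(decisions):
    """Fraction of decisions in a group that received the favourable outcome."""
    if not decisions:
        raise ValueError("group is empty")
    return sum(d.approved for d in decisions) / len(decisions)


def disparate_impact(decisions, is_monitored, threshold=0.8):
    """Compare the monitored group's favourable rate with everyone else's.

    Returns (ratio, flagged), where ratio = rate(monitored) / rate(reference)
    and flagged is True when the ratio falls below the assumed threshold.
    """
    monitored = [d for d in decisions if is_monitored(d)]
    reference = [d for d in decisions if not is_monitored(d)]
    reference_rate = favorable_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favourable outcomes")
    ratio = favorable_rate(monitored) / reference_rate
    return ratio, ratio < threshold


if __name__ == "__main__":
    # Made-up data: 8 of 10 under-50s approved, but only 4 of 10 over-50s.
    log = [Decision(35, i < 8) for i in range(10)] + [Decision(60, i < 4) for i in range(10)]
    ratio, flagged = disparate_impact(log, lambda d: d.age > 50)
    print(f"over-50 ratio: {ratio:.2f}, flagged: {flagged}")
```

The same check can run on training labels at build time or on logged production decisions at runtime. A real monitor would also need to account for small groups, where a low ratio may be noise rather than bias.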

Better the bias you know

The likes of Microsoft, Accenture and Google have all released consumer versions of AI bias detectors in recent months. Elements of IBM’s version, including a library of novel algorithms, code, and tutorials, are being made available to the open source community in the AI Fairness 360 toolkit.

For enterprise, the tools help non-technical executives have more faith in the fairness and accuracy of AI deployments, Leonard said.

While businesses have been busy running AI proofs of concept and trials, there is still some apprehension about their wider roll-out, Leonard explained.

“[They ask] Will it work? I have the assurances from the technical people but how do I know for sure? And how do I monitor it to ensure my job won’t be on the line if I go ahead with this?” he said.

According to an IBM survey of 5,000 C-suite executives, released this week, some 60 per cent of them consider regulatory constraints a barrier to implementing AI.

Heavily regulated sectors and government will be a key market for the tool, Leonard said.

In the documentation for the tool, IBM says that “if a customer or regulator requests the reasoning behind a particular conclusion, the lender can easily explain how the model contributed to the decision”.

Asked whether businesses really wanted to know the biases in their use of data, Leonard said it was always better to be aware.

“You mean, what if there’s not plausible deniability anymore? I think a business would sooner know about it than be caught out after the fact – having been using that factor that they shouldn’t have been using for the past x number of years and then have a massive class action against them,” he said.

“Putting it in a positive way, if they do know these things, they can establish a greater level of trust with their customers and ratchet themselves up against their competitors.”

In the future, there is potential for the tool’s fairness and bias outputs to be made available to a business’s customers to earn their trust, Leonard added.